HoliCity offers a different way of reconstructing a real-world environment so that computers can recognise and understand it. This seemingly trivial task is one of the most fundamental, yet challenging, problems in computer vision. A variety of automated remote-sensing methods exist, from pixel-matching photogrammetry and AI-filtered laser scanning to, more recently, neural networks that help machines understand and interpret objects in urban environments.

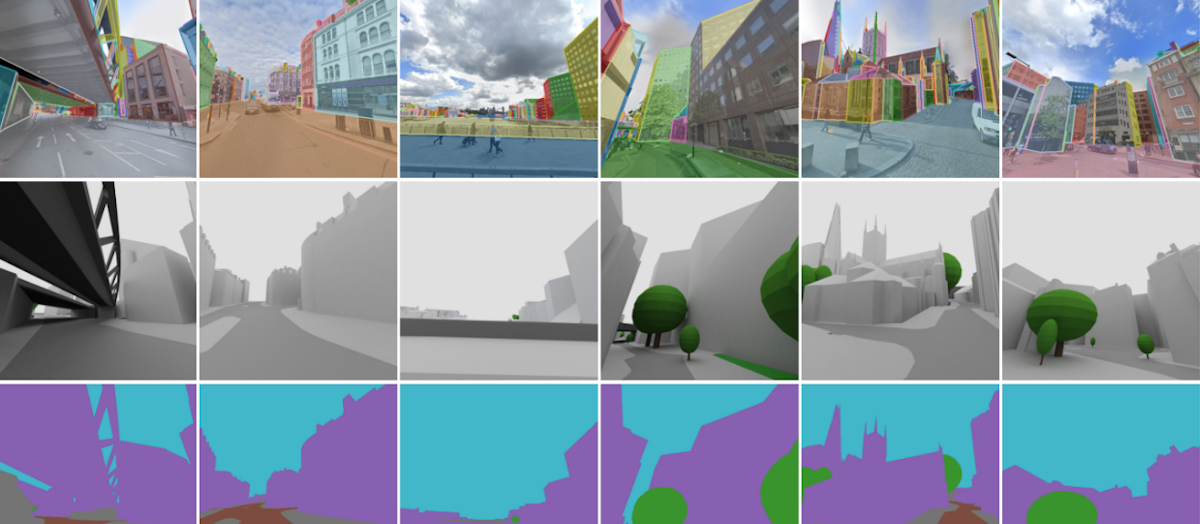

Taking a completely different approach, researchers Yichao Zhou (University of California, Berkeley), Jingwei Huang (Stanford University), Xili Dai (UESTC), Linjie Luo (Bytedance), Zhili Chen (Bytedance) and Professor Yi Ma (UC Berkeley) developed HoliCity, a city-scale dataset for learning holistic 3D structures. Their aim is to teach a system to understand any environment. To do this, they train it by showing it the same scene in a variety of formats. The expectation is that such a system, when deployed in an unfamiliar city, will recognise its surroundings better.

HoliCity: A City-Scale Dataset for Learning Holistic 3D Structures

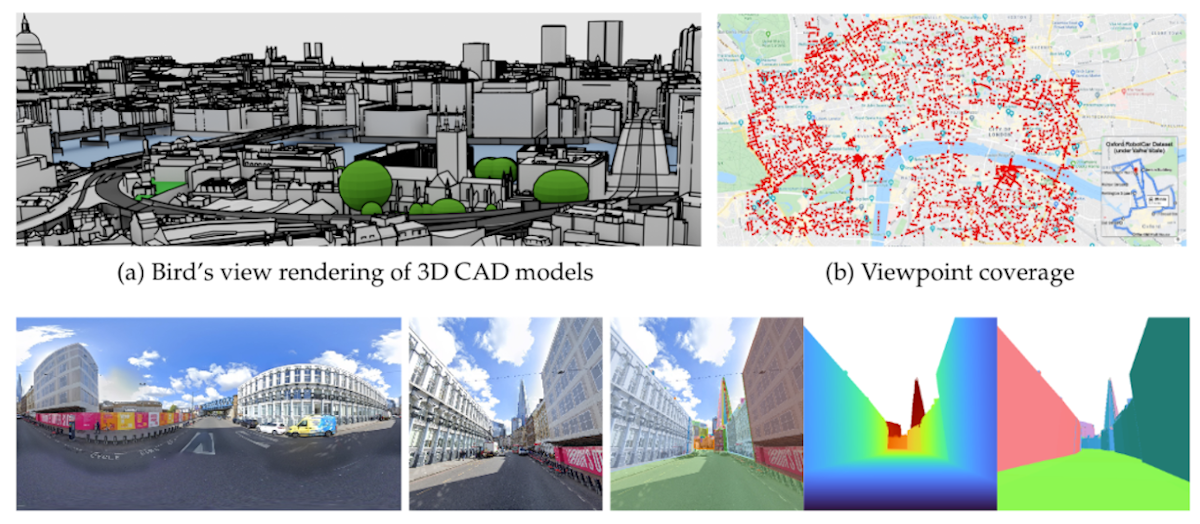

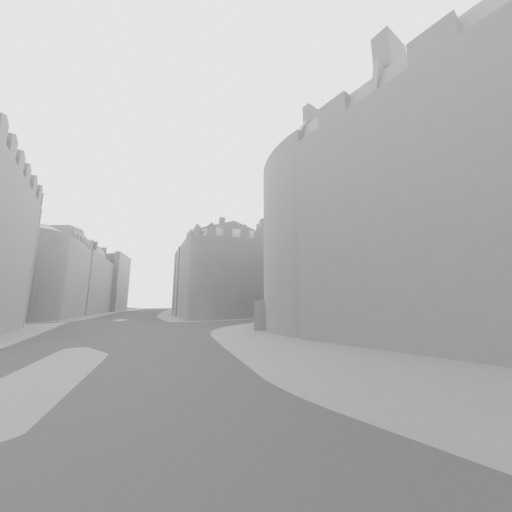

HoliCity is a large-scale dataset of high-resolution street-view panorama images that are accurately aligned with city CAD models for learning holistic 3D structures. The dataset currently consists of 6,300 real-world high-resolution panoramas aligned with 3D CAD models covering a 20 km² area of London. HoliCity provides a rich, accurate, high-level 3D abstraction of the city and therefore enables learning and inferring holistic structures from real images, with the ultimate goal of supporting real-world applications such as city-scale localization, reconstruction, mapping and augmented reality.

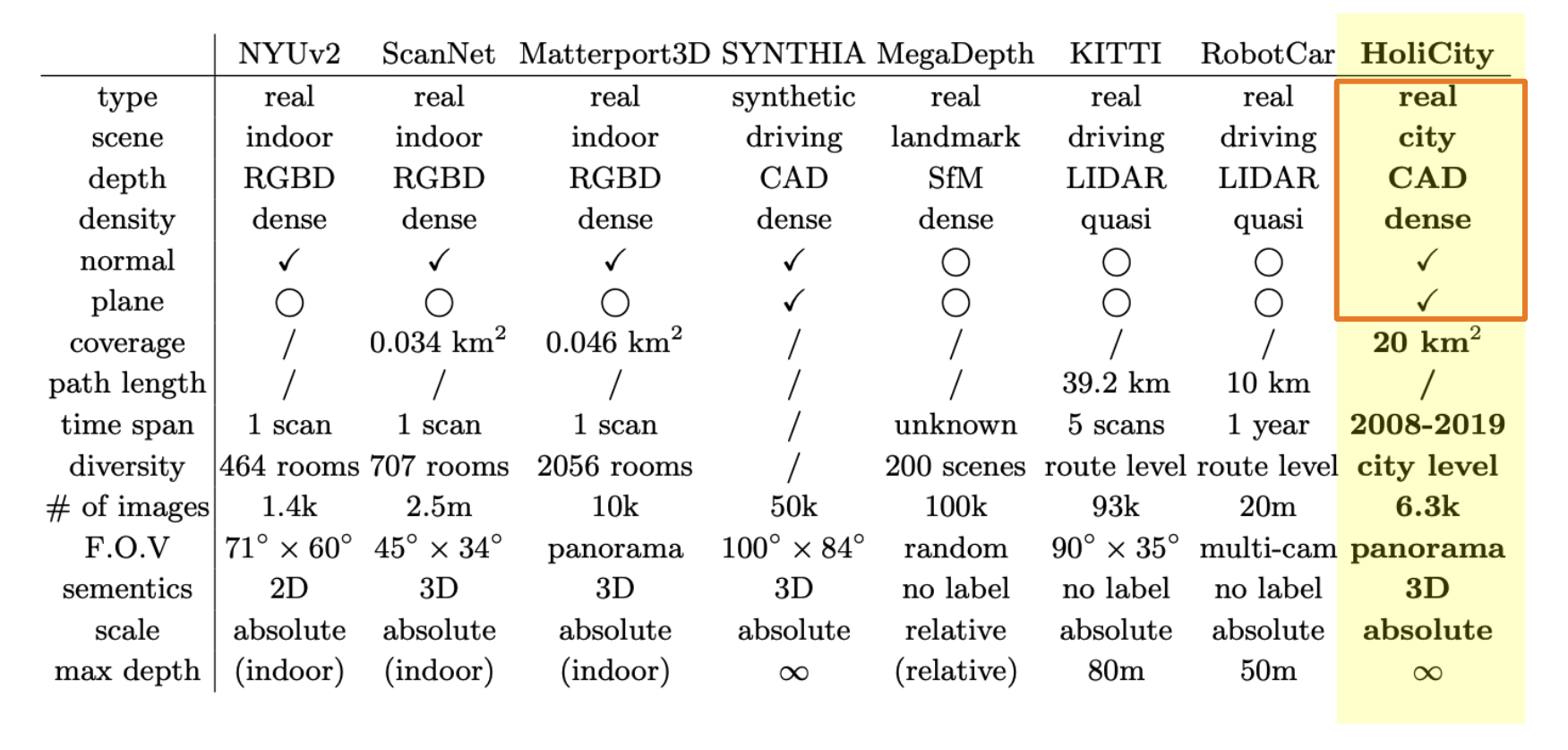

Comparing the HoliCity dataset with similar projects

Although the project can be described as groundbreaking, it is not the first of its kind. Previous attempts, however, achieved only limited success, for a variety of reasons.

“The problem with a data-driven approach to machine learning is that you absolutely need the highest-quality data, and lots of it,” argues Yichao Zhou, a researcher at UC Berkeley. “Methodology, experimentation and coding are of course integral parts of this process, but in my opinion a large-area CAD city model and corresponding RGB imagery are an absolute must. That’s why it was so welcome that AccuCities supported this project with their 3D London city data. The accuracy and detail of the 3D city model were absolutely crucial for the method to work. There is still much to be done, but the project clearly shows that it is possible to teach the system to perform better plane detection and depth estimation. We can also conclude that systems pre-trained on HoliCity show an encouraging level of transferability.”

AccuCities 3D city data available for further research

AccuCities has a proud record of supporting students and researchers with its 3D city data. We offer a large free 3D model of London covering 1 square kilometre, as well as smaller samples of our Cardiff, Bristol and Dublin city models. Students and not-for-profit or research projects can get discounts of up to 80% on our library models as standard. And for projects of special interest to us, we can go even further.

“From our perspective, HoliCity is something of a unicorn project: we see huge potential for it to disrupt and enhance our very industry,” says Michal from AccuCities. “Using large datasets to enhance machine learning so that systems can better understand what they are looking at in an urban environment? Of course we want to be part of it! I think Sandor has already agreed to release more data for the project, and there is a discussion about how to make the data available for subsequent R&D. I cannot say exactly how things will pan out, but any researcher or organisation that wants to build on this project should by all means contact us. In fact, the team behind this project is organising a Holistic 3D Vision Challenge (Plane Detection Track) at ECCV 2020. If this is something you would be interested in, do check it out.”

“Agreed, do check it out and do speak to us,” adds Sandor from AccuCities. “All the real-time automated remote-sensing applications have issues and just don’t work well as systems. Teaching machines to recognise objects might be the holy grail of AI, augmented reality and mixed reality applications. I don’t say this often, but if you have any idea what we are talking about in this article, get involved and get in touch.”

A big thank you to everyone involved for all the effort that went into getting this done. Excellent work. We are all big fans in the office :)

Disclaimer: The street-view images are owned and copyrighted by Google Inc. The refinement of the images’ geographic information and the rendering of the CAD models were done by UC Berkeley. For any commercial or for-profit use of the dataset, one must seek explicit permission from each of these rightful owners.

Acknowledgement: HoliCity is partially supported by research grants from Sony Research, Berkeley EECS, Berkeley FHL Vive Center for Enhanced Reality, and collaboration with Bytedance Research Lab (Silicon Valley).